While trying to understand why my latch-based implementation of the Busicom 141-PF calculator didn't work when implemented in a Spartan-6, I tested the i4001 ROM implementation separately. It seemed to work, so I focused on the i4004 CPU.

Now that I have the i4004 CPU switched back to using clocked flip-flops I tried a broader test. I instantiated one CPU, one ROM, and one RAM, and loaded the ROM with the basic functional test that is loaded by default into the i400x analyzer. This test starts with subroutine calls and returns, then checks conditional jumps before testing the ALU functions. My intent was to see whether there were enough flip-flop clock edges per CLK1 or CLK2 pulse for everything to propagate as needed. It's the path through the ALU I'm most worried about.

I first started with behavioral simulation, and noted that the first few instructions executed as expected. This gave me confidence to try a Post-P&R simulation, which failed. Unlike the original failure, though, where the address placed on the 4-bit data bus alternated between 000 and 001, the ROM address on the bus appeared correct. However, the address presented to the Block RAM containing the instructions was always zero. This suggested the i4001 ROM emulation wasn't working.

This made no sense to me. I'd tested the latch-based i4001 in both Post-P&R simulation and a real Spartan-6 and it seemed to work just fine, but when combined with other modules it appears to fail.

In my career as a professional software engineer I've sometimes encountered code whose author claimed it didn't work because of "a bug in the optimizer". Now, I've found a couple of very real compiler bugs, but they're extremely rare in commonly-used compilers. This sort of problem is almost invariably caused by the programmer not understanding subtle details of the language, and not by bugs in the compiler.

With this in mind I decided to convert the i4001 back to using edge-clocked flip-flops, something I sort of expected to do anyway but hadn't gotten to.

Of course the thing worked immediately. I think I'm done playing with the latch-based implementation of any of these chips.

As I've said before, the problem I have with the edge-clocked flip-flop version of these chips is that the original design often assumes data can flow through multiple latches during a single CLK1 or CLK2 pulse. Since data can only propagate through a flip-flop on a clock edge, there must be more clock edges during a CLK1 or CLK2 pulse than there are flip-flops in series.
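A minimal Python sketch of this constraint, assuming an idealized chain of D flip-flops that all sample on the same clock edge. The function name `last_stage_after` is made up for illustration and is not part of the actual design. In this toy model a value needs one edge per flip-flop to reach the end of the chain, and whatever register captures the result needs a further edge.

```python
def last_stage_after(chain_length, edges, data_in=1):
    """Return the output of the last flip-flop in a chain after `edges` clock edges.

    Every flip-flop starts at 0 and samples its predecessor's *old* output
    on each edge, so the input advances exactly one stage per edge.
    """
    stages = [0] * chain_length
    for _ in range(edges):
        # Simultaneous update: the new stage k is the old stage k-1.
        stages = [data_in] + stages[:-1]
    return stages[-1]


if __name__ == "__main__":
    # Three flip-flops in series: two edges are not enough, three are.
    print(last_stage_after(3, 2))
    print(last_stage_after(3, 3))
```

A chain of transparent latches, by contrast, would pass the value through all stages while the enable is high, which is the behavior the original design relies on.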

An instruction cycle is divided into eight subcycles using 8-stage shift registers that produce one-hot outputs (meaning only one of the outputs is active at any one time, uniquely identifying the subcycle). The shift register in the i4004 is self-initializing, and produces a SYNC signal output that is used by the shift registers in the i4001 and i4002 to synchronize themselves to the one in the i4004.
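The behavior described above can be modeled in a few lines of Python. This is only a sketch under stated assumptions, not the logic of the real chips: the self-initialization is modeled as a standard self-correcting ring counter (a 1 is fed in only when the first seven stages are all 0), SYNC is assumed to be asserted during the last subcycle, and the recovery register is assumed to simply shift SYNC in. The function names are hypothetical.

```python
STAGES = 8


def generator_step(state):
    """Advance the generator's one-hot shift register by one subcycle."""
    # Assumed self-correction: feed a 1 only when stages 0..6 are all 0,
    # so any power-up state settles into a single circulating 1.
    feed = int(not any(state[:-1]))
    return [feed] + state[:-1]


def sync_out(state):
    """Assumption: SYNC is asserted while the last subcycle is active."""
    return state[-1]


def recovery_step(state, sync):
    """Advance a recovery shift register that shifts in the SYNC input."""
    return [sync] + state[:-1]


def run(gen, rec, steps):
    """Step both registers together, as they would be on a shared clock."""
    for _ in range(steps):
        sync = sync_out(gen)  # sampled before either register updates
        gen, rec = generator_step(gen), recovery_step(rec, sync)
    return gen, rec


if __name__ == "__main__":
    # Start both registers in arbitrary, non-one-hot states.
    gen, rec = run([1, 1, 0, 1, 0, 0, 0, 1], [0, 1, 1, 1, 0, 1, 0, 0], 24)
    print(gen, rec)
```

In this model the generator becomes one-hot within eight steps from any starting state, and the recovery register matches it once its initial contents have shifted out.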

Rather than duplicate this critical code in several places I'd extracted both the timing generator and the timing recovery logic into separate Verilog modules. This allows me to test them individually, and use them to test other modules.

One of my concerns was that using edge-clocked flip-flops would result in a one-clock skew in the timing between the generator and the recovery outputs. This would eat into the number of clock edges seen by the flip-flops within a CLK1 or CLK2 "clock" pulse, and result in data not arriving in time or in tristate output driver overlaps.

Subcycle timing signals change in response to CLK1 going active. In my current design, CLK1 (and CLK2) is the output of a flip-flop. The flip-flop that drives CLK1 changes state in response to a rising clock edge, and thus lags the clock edge by about a nanosecond. That means that the flip-flops in the subcycle shift register won't change state, because CLK1 hasn't yet changed when the rising clock edge occurs. Instead, the subcycle shift registers change state on the next rising clock edge, causing a delay of one clock cycle time.

This, in turn, means that the logic that depends on the subcycle timing signal won't change until the rising clock edge after that, or two clock edges after the one that caused the change in CLK1. That's two of my eight rising clock edges consumed already.

[Edit: It's actually only one clock edge. The combinational logic gets the entire period between the CLK1 pulse going active and the next clock edge to update, but the flip-flop doesn't act on the results until that clock edge occurs.]

See why I'm concerned about the possibility of a cycle of skew between the subcycle signals in the i4004 and other chips?

Fortunately, the shift registers in the i4001 and i4002 see the same timing relationship as the shift register in the i4004. Thus the two shift registers should stay synchronized with each other.
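A small cycle-based Python model illustrates why the lag doesn't turn into skew. It assumes every flip-flop samples its inputs as they were just before the rising edge, and that the generator and recovery stages are both clocked from the same registered CLK1. The signal names `gen` and `rec` and the function `first_high_edges` are illustrative, not taken from the design.

```python
def first_high_edges(edges=6, clk1_rises_at=1):
    """Return the clock-edge index at which each registered signal first goes high."""
    clk1 = gen = rec = 0
    first_high = {}
    for edge in range(edges):
        # Compute all next states from the values held *before* this edge.
        next_clk1 = 1 if edge >= clk1_rises_at else 0
        next_gen = clk1  # generator stage reacts to CLK1 as sampled at the edge
        next_rec = clk1  # recovery stage samples the very same CLK1
        clk1, gen, rec = next_clk1, next_gen, next_rec
        for name, value in (("clk1", clk1), ("gen", gen), ("rec", rec)):
            if value and name not in first_high:
                first_high[name] = edge
    return first_high


if __name__ == "__main__":
    print(first_high_edges())
```

Both stages lag CLK1 by one edge, but since they lag by the same amount they change on the same edge as each other.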

But after a series of failures I'm done making assumptions. The behavioral simulation looked good, but what about the Post-P&R simulation?

These screen captures show the Post-P&R simulation waveforms. We can clearly see that the generated and recovered subcycle signals are in sync. Zooming in shows they change on the same clock edge.

Just to avoid disappointment later, I generated a bit file from this test and loaded it into the Spartan-6 on my P170-DH replacement board. The results shown on my logic analyzer match the Post-P&R simulation. I'd post a screen capture of this too, but with a resolution of 2ns rather than the 1ps of the simulation, it's actually less interesting than those above.

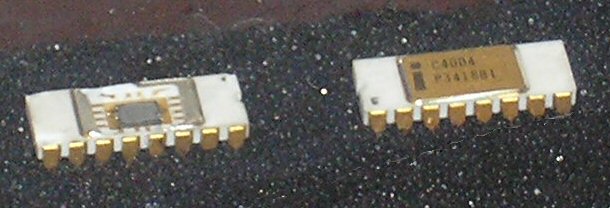

About the same time, I became aware that a group of engineers and computer history buffs had gotten Intel to release the schematics for the first commercially-available microprocessor: the Intel 4004 CPU. They'd also retrieved the software that drove the first commercial product that used the i4004: the Busicom 141-PF.
